During Computex 2022, Nvidia announced the upcoming release of its first system reference designs powered by the Nvidia Grace CPU. Grace is Nvidia's first data center CPU. When it launches, it is meant to drive the next generation of high-performance computing (HPC) and handle workloads such as complex artificial intelligence, cloud gaming, and data analysis.

The upcoming Nvidia Grace CPU Superchip and Nvidia Grace Hopper Superchip will appear in server models from some of the best-known manufacturers, including Asus, Gigabyte, and QCT. Alongside x86 and other Arm-based servers, Nvidia's chips aim to bring new levels of performance to data centers. Both superchips were first revealed earlier this year, and new details have now emerged along with an approximate release date.

Although Nvidia is best known for making some of the best graphics cards, the Grace CPU Superchip has the potential to tackle all kinds of HPC tasks, from complex AI to cloud gaming. According to Nvidia, the Grace CPU Superchip combines two processor chips connected through Nvidia's NVLink-C2C interconnect technology.

Together, the two chips offer up to 144 high-performance Armv9 cores with Scalable Vector Extensions, as well as a memory subsystem with up to 1TB/s of bandwidth. According to Nvidia, the design doubles the memory bandwidth and energy efficiency of current-generation server processors. The use cases Nvidia lists for the new CPU include data analytics, cloud gaming, digital twins, and hyperscale computing.

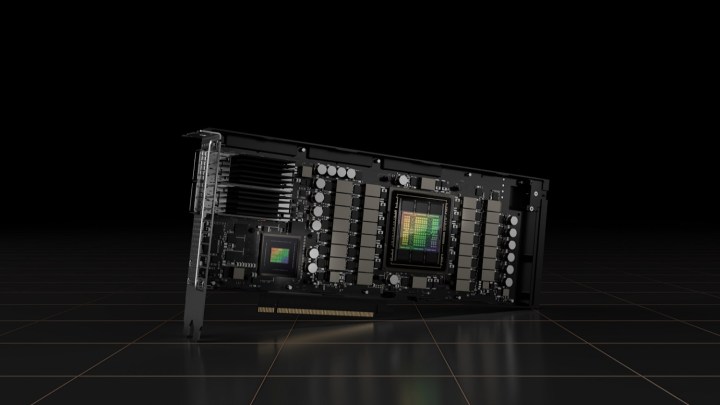

Launching alongside the Grace CPU Superchip is the Nvidia Grace Hopper Superchip. The names are strikingly similar, but "Hopper" gives it away: this is not just a CPU. Grace Hopper pairs an Nvidia Hopper GPU with an Nvidia Grace CPU, again linked by NVLink-C2C.

According to Nvidia, combining the two makes data transfer up to 15 times faster than with traditional CPUs. Both superchips are impressive, but the Grace Hopper pairing should be able to handle just about any task, including giant-scale AI applications.

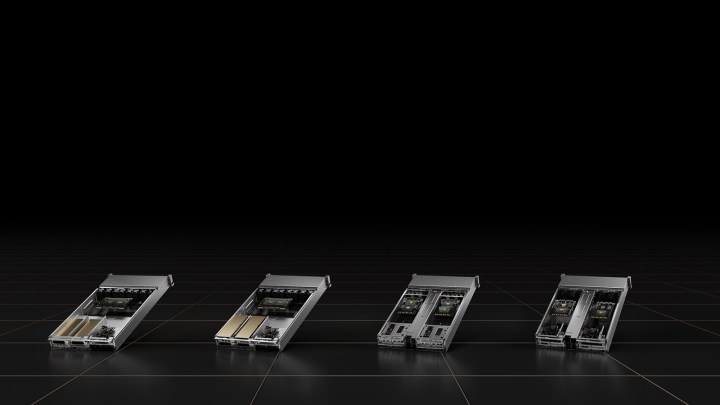

Nvidia's new server design portfolio includes single-baseboard systems in up to four-way configurations, and these designs can be customized to match specific workloads. Nvidia highlighted several of them.

The Nvidia HGX Grace Hopper system for AI training, inference, and HPC comes with the Grace Hopper Superchip and Nvidia’s BlueField-3 data processing units (DPUs). There’s also a CPU-only alternative that combines the Grace CPU Superchip with BlueField-3.

Nvidia's OVX systems, aimed at digital twins and collaboration workloads, combine the Grace CPU Superchip, BlueField-3, and Nvidia GPUs that have yet to be announced. Finally, the Nvidia CGX system, built for cloud gaming and graphics, pairs the Grace CPU Superchip with BlueField-3 and Nvidia A16 GPUs.

Nvidia's new superchips are expected to arrive in the first half of 2023, and the company said dozens of new server models from its partners will become available around that time.
