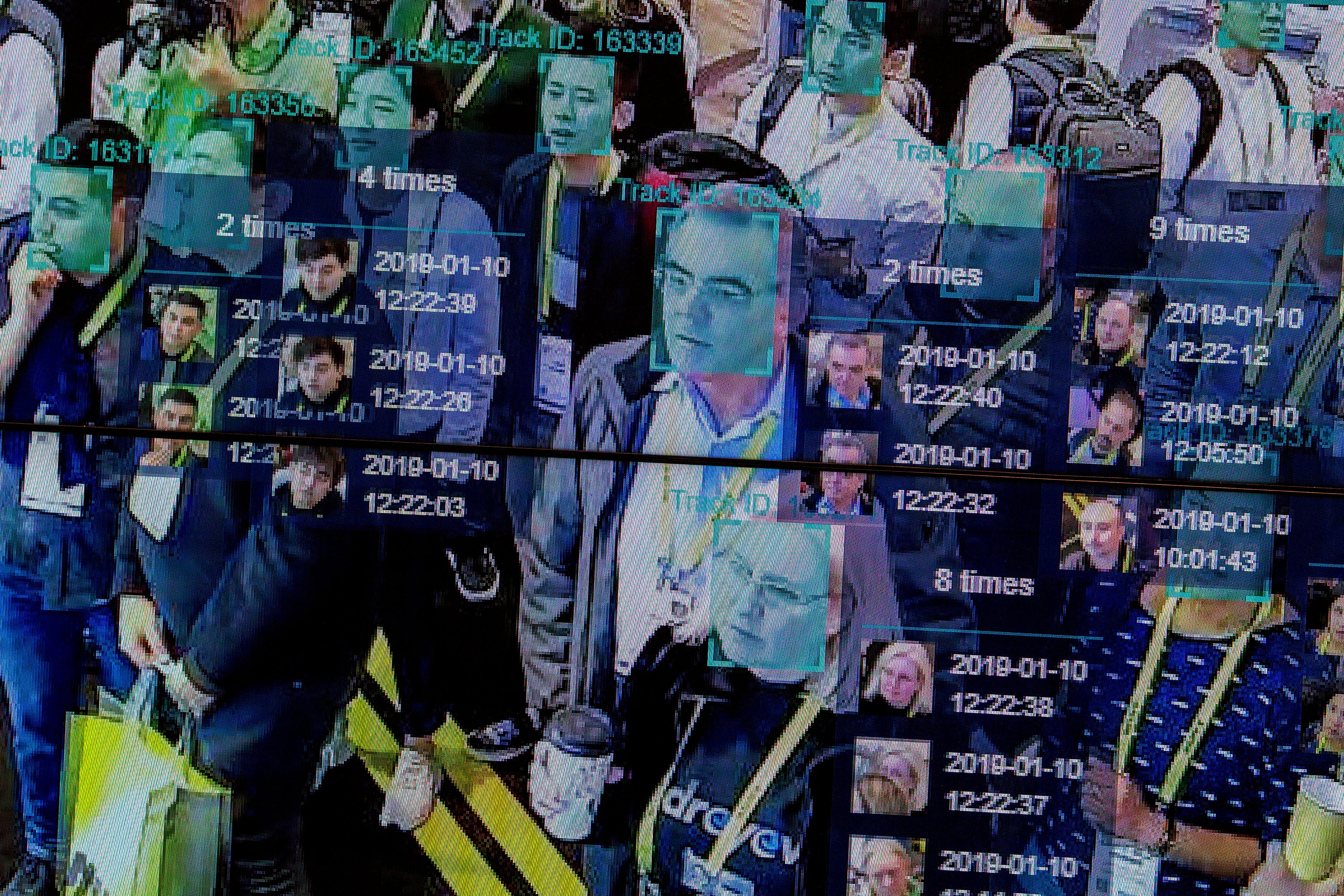

Face scanning and ‘social scoring’ AI can have ‘catastrophic effects’ on human rights, UN says

“If you think about the ways that AI could be used in a discriminatory fashion ... it is pretty scary,” said US Commerce Secretary Gina Raimondo

The United Nations has urged a moratorium on artificial intelligence systems, such as face scanning and social credit systems, that could threaten human rights.

Michelle Bachelet, the UN High Commissioner for Human Rights, said countries should ban AI applications that do not comply with international law.

Applications that should be prohibited include government “social scoring” systems that judge people based on their behaviour, and certain AI-based tools that sort people into groups by characteristics such as ethnicity or gender.

AI-based technologies can be a force for good, but they can also “have negative, even catastrophic, effects if they are used without sufficient regard to how they affect people’s human rights,” Bachelet said in a statement.

Her comments accompanied a new UN report that examines how countries and businesses have rushed to deploy AI systems that affect people’s lives and livelihoods without putting proper safeguards in place to prevent discrimination and other harms.

She didn’t call for an outright ban on facial recognition technology, but said governments should halt the real-time scanning of people’s features until they can show the technology is accurate, won’t discriminate and meets certain privacy and data protection standards.

While the report did not name specific countries, China has been among those rolling out facial recognition technology, particularly as part of surveillance in the western region of Xinjiang, where many of its minority Uyghurs live.

The report also voices wariness about tools that try to deduce people’s emotional and mental states by analysing their facial expressions or body movements, saying such technology is susceptible to bias and misinterpretation and lacks a scientific basis.

“The use of emotion recognition systems by public authorities, for instance for singling out individuals for police stops or arrests or to assess the veracity of statements during interrogations, risks undermining human rights, such as the rights to privacy, to liberty and to a fair trial,” the report says.

The report’s recommendations echo the thinking of many political leaders in Western democracies, who hope to tap into AI’s economic and societal potential while addressing growing concerns about the reliability of tools that can track and profile individuals and make recommendations about who gets access to jobs, loans and educational opportunities.

European regulators have already taken steps to rein in the riskiest AI applications. Proposed regulations outlined by European Union officials this year would ban some uses of AI, such as real-time scanning of facial features, and tightly control others that could threaten people’s safety or rights.

President Joe Biden’s administration has voiced similar concerns about such applications, though it hasn’t yet outlined a detailed approach to curtailing them. A newly formed group called the Trade and Technology Council, jointly led by American and European officials, has sought to collaborate on developing shared rules for AI and other tech policy.

Efforts to set limits on the riskiest uses have been backed by Microsoft and other US tech giants that hope to guide the rules affecting the technology they’ve helped to build.

“If you think about the ways that AI could be used in a discriminatory fashion, or to further strengthen discriminatory tendencies, it is pretty scary,” said US Commerce Secretary Gina Raimondo during a virtual conference in June. “We have to make sure we don’t let that happen.”

She was speaking with Margrethe Vestager, the European Commission’s executive vice president for the digital age, who suggested some AI uses should be off-limits completely in “democracies like ours,” such as social scoring that can close off someone’s privileges in society, and “broad, blanket use of remote biometric identification in public space.”

Vestager said there’s something fundamental about being able to say, “I live in a real society. I’m not living in the trailer of a horror movie that I don’t want to see the end of.”

Additional reporting by Associated Press
