The automation industry is growing rapidly, both in size and in technical capability. Terms such as artificial intelligence (AI) are often used to explain this complex technology and to convey that solutions are more capable and advanced than ever before.

Types of vision systems used in warehousing and distribution environments

There are three primary applications of vision systems in warehousing and distribution environments: inspection and mapping, pick-and-place without deep learning and pick-and-place with deep learning. All three types of vision systems include the same three main elements: an input (camera), a processor (computer and program) and an output (robot). They may use similar cameras and robots; the difference lies in the program.

Inspection and mapping. Vision systems for inspection are used in a variety of industrial robot applications, providing outputs of “pass/fail,” “present/not present” or a measurement value. The result dictates the next step in a process. An example is using a vision system in a manufacturing cell to check for quantity present, color or other pre-defined attributes (e.g., 3 red, 1 yellow, 2 blue). The results are communicated to an external processing system that takes a prescribed set of pre-determined actions.
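The pass/fail logic in that example can be sketched in a few lines of Python. This is a minimal illustration, not a vendor implementation: the `EXPECTED` table and the `inspect` function are hypothetical names, and the list of detected colors stands in for whatever the camera software actually reports.

```python
from collections import Counter

# Hypothetical expected contents, taken from the example in the text:
# 3 red, 1 yellow, 2 blue.
EXPECTED = {"red": 3, "yellow": 1, "blue": 2}


def inspect(detected_colors, expected=EXPECTED):
    """Return "pass" only if the detected color counts match exactly."""
    counts = dict(Counter(detected_colors))
    return "pass" if counts == expected else "fail"


# The result is handed to an external system, which decides what happens next.
result = inspect(["red", "red", "red", "yellow", "blue", "blue"])
```

The vision system only reports the result; the prescribed action (accept, reject, rework) lives in the external processing system.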

Mapping systems are used less frequently but resemble inspection systems in that vision maps do not translate directly into machine action. An example is a mobile robot that navigates by vision. The map is created and stored in a database, and the desired routes are pre-calculated. As the robot drives through the system along pre-programmed paths, the vision system determines the robot's X-Y position on the known map. An external routing algorithm then provides instructions to the robot (continue forward, turn left, etc.) using the known map and the live camera feed.
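The division of labor described here, where vision supplies a position and a separate routing step supplies the instruction, can be sketched as follows. Everything in this example is an assumption made for illustration: the grid map, the four compass headings and the `next_instruction` function are hypothetical, and the route is assumed to consist of adjacent grid cells.

```python
# Assumed convention: positions are cells on an X-Y grid and +Y is "north".
HEADINGS = ["N", "E", "S", "W"]  # listed in clockwise order
STEP = {"N": (0, 1), "E": (1, 0), "S": (0, -1), "W": (-1, 0)}
TURNS = {0: "continue forward", 1: "turn right", 2: "turn around", 3: "turn left"}


def next_instruction(position, heading, route):
    """Return the instruction for a robot at `position` facing `heading`.

    `position` is the X-Y cell reported by the vision system; `route` is a
    pre-calculated list of adjacent grid cells stored with the map.
    """
    if position not in route:
        return "stop"  # off the known route: no pre-programmed answer exists
    i = route.index(position)
    if i == len(route) - 1:
        return "arrived"
    dx = route[i + 1][0] - position[0]
    dy = route[i + 1][1] - position[1]
    target = next(h for h, step in STEP.items() if step == (dx, dy))
    clockwise_quarter_turns = (HEADINGS.index(target) - HEADINGS.index(heading)) % 4
    return TURNS[clockwise_quarter_turns]


route = [(0, 0), (0, 1), (1, 1)]
```

Nothing in this loop learns: a robot that leaves the stored route simply stops, which is the behavior the text describes for systems that do not require deep learning.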

Inspection and mapping systems can be very sophisticated, including the routing algorithms that guide the mobile robots, but they do not require deep learning or AI.

Pick-and-place without deep learning. Pick-and-place vision systems with limited variables are deployed on most robotic cells installed today. The cameras direct the robot’s motion through closed-loop feedback, enabling the robots to operate very quickly and accurately, within their prescribed parameters. These systems do not have a “learning loop” but are instead pre-programmed for a fixed set of objects and instructions. While these systems are “smart,” they do not add intelligence or learning over time.
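A rough sketch of such a non-learning cell is shown below. The object catalog, the grip values and both function names are hypothetical, and the simple proportional correction is only one common way to close a feedback loop; it is not taken from any particular product.

```python
# Hypothetical fixed catalog: the only objects this cell is programmed to handle.
GRIP_PLAN = {
    "carton_small": {"grip_width_mm": 120, "force_n": 15},
    "carton_large": {"grip_width_mm": 300, "force_n": 30},
}


def plan_pick(label):
    """Look up the pre-programmed grip; unknown objects return None."""
    return GRIP_PLAN.get(label)


def servo_to_target(gripper_xy, target_xy, gain=0.5, tolerance=1.0, max_steps=50):
    """Closed-loop correction: each cycle, the camera-measured error between
    gripper and target is reduced by a fixed fraction (`gain`).

    Returns the final position and the number of correction steps used.
    """
    x, y = gripper_xy
    for step in range(max_steps):
        ex, ey = target_xy[0] - x, target_xy[1] - y
        if (ex * ex + ey * ey) ** 0.5 <= tolerance:
            return (x, y), step
        x += gain * ex
        y += gain * ey
    return (x, y), max_steps


final_xy, steps_used = servo_to_target((0.0, 0.0), (100.0, 0.0))
```

The loop is fast and accurate inside its prescribed parameters, but the catalog never grows on its own: `plan_pick("poly_bag")` returns `None` today and will return `None` after a million cycles.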

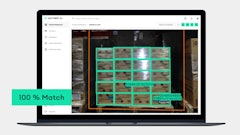

Pick-and-place with deep learning. The most sophisticated vision systems employ deep learning. These systems are often described with sensational terms such as AI. Complicating things further, many non-learning systems are marketed as if they had intelligent (learning) capability, which leads to confusion. Deep learning is a subset of AI.

Deep learning engineers use a small set of objects as the learning base and teach the computer program (algorithm) to recognize a broad array of objects based on the characteristics of that small sample. For example, if you can recognize a few types of stop signs, you can apply that knowledge to recognize many types of stop signs. The deep learning program learns features that are independent of the individual objects, so it can generalize over a wide spectrum of objects. Through such a program, robots can recognize the edge of an object regardless of camera exposure or lighting conditions. Deep learning systems do not rely on a single variable, such as color, because something as simple as a change in exposure or lighting would ruin the result. Color may be one of the variables, but additional, more abstract variables are also used for object recognition in the deep learning program.

By way of comparison, a deep learning system used for robotic picking is like a Tesla driving in full autonomous mode: it can park anywhere and navigate from A to B using the best route, in most weather conditions and on all road types.
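The fragility of a single fixed variable can be shown with a toy example. The code below is not deep learning; it only contrasts a fixed brightness threshold with a hand-written ratio check that is unaffected by uniform exposure changes. A deep learning program learns many such robust features from data instead of having them coded by hand. The pixel rows and both function names are invented for illustration.

```python
def find_by_threshold(row, threshold=128):
    """Indices of pixels brighter than a fixed threshold (a single variable)."""
    return [i for i, value in enumerate(row) if value > threshold]


def find_edges(row, min_ratio=1.5):
    """Indices where brightness changes by at least `min_ratio` relative to
    the previous pixel. Scaling every pixel by the same factor leaves the
    ratios, and therefore the result, unchanged."""
    edges = []
    for i in range(1, len(row)):
        low, high = sorted((row[i - 1], row[i]))
        if low > 0 and high / low >= min_ratio:
            edges.append(i)
    return edges


# One row of pixels crossing a bright object on a dark background.
bright = [40, 40, 200, 200, 200, 40, 40]
# The same scene with the exposure halved.
dim = [value * 0.5 for value in bright]
```

With these rows, the fixed threshold finds the object in `bright` and nothing at all in `dim`, while the ratio check reports the same two edges in both.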


Basic building blocks for deep learning systems

The deep learning principles used by industrial robots and self-driving cars are similar. Self-driving cars recognize stop signs of different shapes, sizes, colors and locations. Once an object is identified as a stop sign, the algorithm calculates a response based on external variables, such as the location and direction of movement of other cars, pedestrians and road features. Those calculations must be fast.

Vision-guided robots with deep-learning programs for industrial applications recognize various types of packaging, location and other variables (e.g., partly buried under other packaging) and direct machine action based on those variables. Compared to self-driving cars, some of the variables for industrial robots are not as complex, but the underlying approach to learning and responding quickly is the same.

There are three requirements for deep learning solutions: computer processing power, high-quality and varied data, and deep learning algorithms. Each requirement depends on the other two.

Deep learning vision systems for vision-guided industrial robots

Commercial applications using robots to pick, place, palletize or de-palletize in a warehouse environment require three basic building blocks: cameras, software and robots. The cameras and robots are the eyes and arms; the software is the brain. All three components must work together to optimize system performance.

Camera technology enables the flow of high-quality data. Cameras and image post-processing provide a stream of data ready for the deep learning algorithm to evaluate. Some cameras are better suited to a given application than others, but the camera is not what makes a vision-guided robot capable of deep learning. The camera supplies data but does not translate that data into actionable commands.

This is where the software, meaning the deep learning algorithm, comes in: it takes data in from the cameras, processes it and sends results out to the robots.
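That flow from camera to software to robot can be sketched as a single cycle. The sketch makes several assumptions: `Detection`, `PickCommand`, `choose_pick` and `run_cycle` are hypothetical names, the `model` argument stands in for a trained deep learning model, and the confidence cutoff of 0.8 is an arbitrary illustrative value.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Detection:
    """One object the model found in a camera frame (hypothetical structure)."""
    label: str
    x_mm: float
    y_mm: float
    confidence: float


@dataclass
class PickCommand:
    """Where the robot should pick (hypothetical structure)."""
    x_mm: float
    y_mm: float


def choose_pick(detections: List[Detection],
                min_confidence: float = 0.8) -> Optional[PickCommand]:
    """Pick the most confident detection above the cutoff, if there is one."""
    candidates = [d for d in detections if d.confidence >= min_confidence]
    if not candidates:
        return None
    best = max(candidates, key=lambda d: d.confidence)
    return PickCommand(best.x_mm, best.y_mm)


def run_cycle(frame: object,
              model: Callable[[object], List[Detection]],
              send_to_robot: Callable[[PickCommand], None]) -> bool:
    """Data in from the camera, process, result out to the robot."""
    command = choose_pick(model(frame))
    if command is None:
        return False  # nothing pickable in this frame
    send_to_robot(command)
    return True


# Stand-ins for a real camera frame, trained model and robot interface.
sent: List[PickCommand] = []
fake_model = lambda frame: [Detection("carton", 10.0, 20.0, 0.95),
                            Detection("bag", 5.0, 5.0, 0.40)]
picked = run_cycle(None, fake_model, sent.append)
```

Passing the model and the robot interface in as plain callables keeps the three building blocks separate, so a camera or robot can be swapped without touching the decision logic.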

The robot and end-effector (a.k.a. gripper) also play a critical role in system performance. They must provide the level of reach, grip-strength, dexterity, and speed required for the application. The robot and end-effector respond to commands from the deep learning algorithm. Without the deep learning algorithm, the robot would respond to pre-programmed, pre-configured commands.

To summarize, there are three points to remember about AI and vision-guided robotic systems:

• Deep learning algorithms classify data in multiple categories.

• Deep learning algorithms require both high-quality and varied data.

• Algorithms become more powerful over time.

The latest developments in camera technology and computer processing power serve as building blocks for advanced deep learning software that improves robot performance. The future has arrived.